Weapons

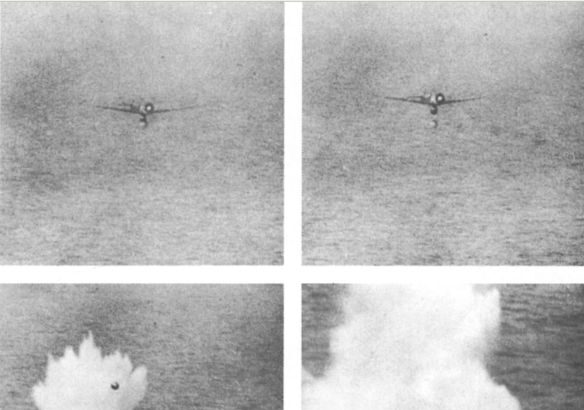

Kurt Bouncing Bomb

The only known pictures of the SB 800 Kurt bouncing bomb. An FW 190 drops two bombs, which bounce over the sea for a considerable distance before sinking to explode hydrostatically. Special bomb SB 800 RS known also as Prismen Rollbombe “Kurt” 1 and 2 for attacking the dams of water reservoirs. This was undoubtedly copied from the famous Barnes…

Most Recent

Mongol Archery

Originally the basis of the Mongols’ military power and later almost driven to extinction by the advent of firearms, archery has been revived in Mongolia as a purely recreational sport. Mongolian archery in the Middle Ages had great military significance. The earliest surviving piece of Mongolian writing is a stone…

WWII USN Torpedoes

“Damn those exploders…damn them all to hell!” exclaimed the skipper of submarine Jack, Lieutenant Commander Thomas Michael Dykers, on June 20, 1943, as he watched through the periscope and saw a torpedo, fired from an excellent position and at the optimal range of 1,000 yards, “premature” (explode before reaching its…

Motobomba FFF

The Motobomba, or more properly the Motobomba FFF (Freri Fiore Filpa), was a torpedo used by Italian forces during World War II. The designation FFF was derived from the last names of the three men involved with its original design: Lieutenant-Colonel Prospero Freri, Captain-Disegnatore Filpa, and Colonel Amedeo Fiore. The FFF…

‘Istrebitel Sputnikov’ (IS)

An artist’s conception of a Soviet anti-satellite weapon destroying a satellite in 1984. The Russian satellite destroyer known as ‘Istrebitel Sputnikov’ (IS). Carrying an explosive charge, it would be guided on an intercept course towards enemy satellites. America’s ability to gather intelligence on Soviet developments was severely limited during the…

ASDIC

This pictorial illustrates the shape of the detection area for the 144 ASDIC, the ‘Q’ attachment and the 147 ASDIC. From “Anti-Submarine Detection Investigation Committee,” dating to British, French, and American anti-submarine warfare research during World War I. Known as ASDIC (Admiralty’s Anti-Submarine Division)…

Hitler’s Saw

The MG-42 was designed during World War II as a replacement for the multipurpose MG-34, which was less than suitable for wartime mass production and was also somewhat sensitive to fouling and mud. It was manufactured in great numbers by companies like Grossfuss, Mauser-Werke, Gustloff-Werke, Steyr-Daimler-Puch, and several…

Most Popular

The Frankish Way of War

The kingdoms and peoples of Europe and North Africa just before the East Roman Emperor…

WWII US Army Trucks

WWII for the US was the first completely mechanized war. There were tanks, jeeps, tanks,…

Renaissance Warfare I

As more centralized governments developed during the Later Middle Ages (1000-1500), significant changes took place…