THE RIGHT STUFF

Understanding these calculations was the easy part. There wasn’t any great “secret” to atomic energy (and there isn’t now). Physicists at the time in the United States, Great Britain, Russia, Germany, Italy, and Japan all quickly grasped the significance of nuclear fission. The hard part, and this is still true today, is producing the materials that can sustain this chain reaction. Some concluded that the material could not be made, or at least not made in time to affect the course of the war. Others disagreed—among them the influential authors of the MAUD committee report. The crucial difference in the United States was not superior scientific expertise but the industrial capability to make the right materials. Groves used this capability to build by the end of the war the manufacturing equivalent of the American automobile industry—an entirely new industry focused on creating just one product.

To understand the challenge the United States faced then, and which other nations who want nuclear weapons face today, we have to delve a little deeper into atomic structures. Ordinary uranium cannot be used to make a bomb. Uranium, like many other elements, exists in several alternative forms, called isotopes. Each isotope has the same number of protons (and so maintains the same electric charge) but varies in the number of neutrons (and thus, in weight). Most of the atoms in natural uranium are the isotope U-238, meaning that they each have 92 protons and 146 neutrons for a total atomic weight of 238. When an atom of U-238 absorbs a neutron, it can undergo fission, but this happens only about one-quarter of the time. Thus, it cannot sustain the fast chain reaction needed to release enormous amounts of energy. But one of every 140 atoms in natural uranium (about 0.7 percent) is of another uranium isotope, U-235. Each U-235 nucleus has 92 protons but only 143 neutrons. This isotope will fission almost every time a neutron hits it. The challenge for scientists is to separate enough of this one part of fissile uranium from the 139 parts of non-fissile uranium to produce an amount that can sustain a chain reaction. This quantity is called a critical mass. The process of separating U-235 is called enrichment.
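The isotope arithmetic in this passage can be checked in a few lines of Python. This is only an illustrative sketch: the function and variable names are invented for the example, and the inputs are the figures quoted above (92 protons, mass numbers 238 and 235, one atom in 140).

```python
# Illustrative check of the isotope figures quoted in the text.
# All names are hypothetical; the numbers come from the passage above.

URANIUM_PROTONS = 92  # every uranium isotope has 92 protons


def neutron_count(mass_number: int, protons: int = URANIUM_PROTONS) -> int:
    """Neutrons = total nucleons (the mass number) minus protons."""
    return mass_number - protons


def percent_from_ratio(one_in: int) -> float:
    """Convert 'one atom in N' into a percentage."""
    return 100.0 / one_in


u238_neutrons = neutron_count(238)
u235_neutrons = neutron_count(235)
u235_percent = percent_from_ratio(140)

print(f"U-238: {u238_neutrons} neutrons")
print(f"U-235: {u235_neutrons} neutrons")
print(f"U-235 share of natural uranium: about {u235_percent:.1f} percent")
```

The two isotopes differ by only three neutrons, a mass difference of little more than one percent, which is why separating them takes so many repetitions.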

Almost all of the $2 billion spent on the Manhattan Project (about $23 billion in 2006 dollars) went toward building the vast industrial facilities needed to enrich uranium. The Army Corps of Engineers built huge buildings at Oak Ridge, Tennessee, to pursue two different enrichment methods. The first was gaseous diffusion. This process converts the uranium into gas, then uses the slightly different rates at which one isotope diffuses across a porous barrier to separate out the U-235. The diffusion is so slight that it requires thousands of repetitions—and hundreds of diffusion tanks. Each leg of the U-shaped diffusion plant at Oak Ridge was a half-mile long.

The other system was electromagnetic separation. Again, the uranium is converted into a gas. It is then moved through a magnetic field in a curved vacuum tank. The heavier isotope tends to fly to the outside of the curve, allowing the lighter U-235 to be siphoned off from the inside curve. Again, this process must be repeated thousands of times to produce even small quantities of uranium rich in U-235. Most of the uranium for the bomb dropped on Hiroshima was produced in this way.

Both of these processes are forms of uranium enrichment and are still in use today. By far the most common and most economical method of enriching uranium, however, is to use large gas centrifuges. This method (considered but rejected in the Manhattan Project) pipes uranium gas into large vacuum tanks; rotors then spin it at supersonic speeds. The heavier isotope tends to fly to the outside wall of the tank, allowing the lighter U-235 to be siphoned off from the inside. As with all other methods, thousands of cycles are needed to enrich the uranium. Uranium enriched to 3–5 percent U-235 is used to make fuel rods for modern nuclear power reactors. The same facilities can also enrich uranium to the 70–90 percent levels of U-235 needed for weapons.

There is a second element that can sustain a fast chain reaction: plutonium. This element is not found in nature and was still brand-new at the time of the Manhattan Project. In 1940, scientists at Berkeley discovered that after absorbing an additional neutron, some of the U-238 atoms transformed into a new element with 93 protons and an atomic weight of 239. (The transformation process is called beta-decay, where a neutron in the nucleus changes to a proton and emits an electron.) Uranium was named after the planet Uranus. Since this new element was “beyond” uranium, they named it neptunium after the next planet in the solar system, Neptune. Neptunium is not a stable element. Some of it decays rapidly into a new element with 94 protons. Berkeley scientists Glenn Seaborg and Emilio Segré succeeded in separating this element in 1941, calling it plutonium, after the next planet in line, Pluto.

Plutonium-239 is fissile. In fact, it takes less plutonium to sustain a chain reaction than uranium. The Manhattan Project thus undertook two paths to the bomb, both of which are still the only methods pursued today. Complementing the uranium enrichment plants at Oak Ridge, the Project built a small reactor at the site and used it to produce the first few grams of plutonium in 1944. The world’s first three large-scale nuclear reactors were constructed that year in just five months in Hanford, Washington. There, rods of uranium were bombarded with slow neutrons, changing some of the uranium into plutonium. This process occurs in every nuclear reactor, but some reactors, such as the ones at Hanford, can be designed to maximize this conversion process.

The reactor rods must then be chemically processed to separate the newly produced plutonium from the remaining uranium and other highly radioactive elements generated in the fission process. This reprocessing typically involves a series of baths in nitric acid and other solvents and must be done behind lead shielding with heavy machinery. The first of the Hanford reactors went operational in September 1944 and produced the first irradiated slugs (reactor rods that had been bombarded with neutrons) on Christmas Day of that year. After cooling and reprocessing, the first Hanford plutonium arrived in Los Alamos on February 2, 1945. The lab had gotten its first 200 grams of U-235 from Oak Ridge a year earlier, and it now seemed that enough fissile material could be manufactured for at least one bomb by August 1945.

The Manhattan Project engineers and scientists had conquered the hardest part of the process—producing the material. But that does not mean that making the rest of the bomb is easy.

BOMB DESIGN

The two basic designs for atomic bombs developed at Los Alamos are still used today, though with refinements that increase their explosive yield and shrink their size.

In his introductory lectures, Robert Serber explained the basic problem that all bomb designers have to solve. Once the chain reaction begins, it takes about 80 generations of neutrons to fission a whole kilogram of material. This takes place in about 0.8 microseconds, or less than one millionth of one second. “While this is going on,” Serber said, “the energy release is making the material very hot, developing great pressure and hence tending to cause an explosion.”
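The figure of about 80 generations can be recovered with a rough back-of-the-envelope estimate, sketched here in Python. Two things are assumptions not stated in the text: that the number of fissions simply doubles with each generation, and the standard values for Avogadro's number and the molar mass of U-235. The function names are invented for the illustration.

```python
import math

# Standard constants, not taken from the text.
AVOGADRO = 6.022e23       # atoms per mole
MOLAR_MASS_U235 = 235.0   # grams per mole, approximated by the mass number


def atoms_in_mass(grams: float) -> float:
    """Approximate number of U-235 atoms in a given mass."""
    return grams / MOLAR_MASS_U235 * AVOGADRO


def doublings_needed(atoms: float) -> float:
    """Doublings for one fission to grow to `atoms` fissions,
    assuming each generation doubles the count (a simplification)."""
    return math.log2(atoms)


atoms_per_kilogram = atoms_in_mass(1000.0)
generations = doublings_needed(atoms_per_kilogram)

# Figures quoted in the text: about 80 generations in about 0.8 microseconds.
ns_per_generation = 0.8e-6 / 80 * 1e9

print(f"Atoms in one kilogram: {atoms_per_kilogram:.2e}")
print(f"Doublings needed: {generations:.0f}")
print(f"Time per generation: about {ns_per_generation:.0f} nanoseconds")
```

Under these assumptions the estimate comes out at roughly 81 doublings, consistent with the "about 80" quoted above, and each generation lasts on the order of ten nanoseconds. Because the growth is exponential, nearly all of the energy is released in the last few generations, which is why the material must be held together until the very end.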

This is a bit of an understatement. The quickly generated heat rises to about 10 billion degrees Celsius. At this temperature the uranium is no longer a metal but has been converted into a gas under tremendous pressure. The gas expands at great velocity, pushing the atoms further apart, increasing the time necessary for neutron collisions, and allowing more neutrons to escape without hitting any atoms. The material would thus blow apart before the weapon could achieve full explosive yield. When this happens in a poorly designed weapon it is called a “fizzle.” There is still an explosion, just smaller than designed and predicted.

Led by Robert Oppenheimer, the scientific teams developed two methods for achieving the desired mass and explosive yield. The first is the gun assembly technique, which rapidly brings together two subcritical masses to form the critical mass necessary to sustain a full chain reaction. The second is the implosion technique, which rapidly compresses a single subcritical mass into the critical density.

The gun design is the less complex of the two. It basically involves placing a subcritical amount of U-235 at or around one end of a gun barrel and shooting a plug of U-235 into the assembly. To avoid a fizzle, the plug has to travel at a speed faster than that of the nuclear chain reaction, which works out to about 1,000 feet per second. The material is also surrounded by a “tamper” of uranium that helps reflect escaping neutrons back into the bomb core, thus reducing the amount of material needed to achieve a critical mass.

The nuclear weapon that the United States dropped on Hiroshima, Japan, on August 6, 1945, was a gun-type weapon. The bomb was called “Little Boy”; the gun barrel inside it weighed about 1,000 pounds and was six feet long. The science was so well understood, even at that time, that it was used without being explosively tested beforehand. Today, this is almost certainly the design that a terrorist group would try to duplicate if they could acquire enough highly enriched uranium. The Hiroshima bomb used 64 kilograms of U-235. Today, a similar bomb could be constructed with approximately 25 kilograms, in an assembled sphere about the size of a small melon.

Gun-design weapons can use only uranium as a fissile material. The chain reaction in plutonium proceeds more rapidly than the plug can be accelerated, thus causing the device to explode prematurely. But plutonium can be used in another design that uniformly compresses the material to achieve critical mass (as can uranium). This is a more complex design but allows for a smaller device, such as those used in modern missile warheads. The implosion design was used in the first nuclear explosion, the Trinity test at Alamogordo, New Mexico, on July 16, 1945, and in the “Fat Man” nuclear bomb dropped on Nagasaki, Japan, on August 9, 1945.

The implosion method of assembly involves a sphere of bomb material surrounded by a tamper layer and then a layer of carefully shaped plastic explosive charges. With exquisite microsecond timing, the explosives detonate, forming a uniform shock wave that compresses the material down to critical mass. A neutron emitter at the center of the device (usually a thin wafer of polonium that is squeezed together with a sheet of beryllium) starts the chain reaction. The Trinity test used about 6 kilograms of plutonium, but modern implosion devices use approximately 5 kilograms of plutonium or less—a sphere about the size of a plum.

By spring 1945 the Los Alamos scientists were frantically rushing to assemble what they called the “gadget” for the world’s first atomic test. Although they had spent years in calculation, the staggering 20-kiloton magnitude of the Trinity explosion surpassed expectations. Secretary of War Henry Stimson received word of the successful test while accompanying President Truman at the Potsdam Conference. At the close of the conference, Truman made a deliberately veiled comment to Stalin, alluding to a new U.S. weapon. The Soviet premier responded with an equally cryptic nod and “Thank you.”

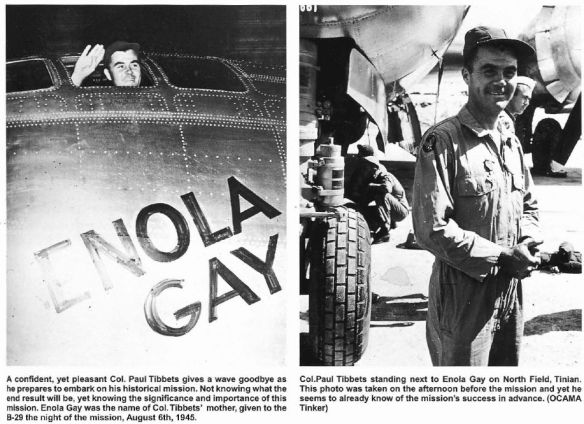

Back in the U.S., the wheels were in motion, and the first atomic bomb, “Little Boy,” was on a ship headed to Tinian, an island in the Marianas within bomber range of Japan. In the months leading up to Trinity, top government officials had selected targets and formed a policy of use. The eight-member Interim Committee, responsible for A-bomb policy and chaired by Stimson, concluded that “we could not give the Japanese any warning; that we could not concentrate on a civilian area; but that we should seek to make a profound psychological impression on as many of the inhabitants as possible . . . [and] that the most desirable target would be a vital war plant employing a large number of workers and closely surrounded by workers’ houses.” On August 6, 1945, Little Boy exploded with a force of 15 kilotons over the first city on the target list, Hiroshima.

DROPPING THE BOMB

To this day, the decision to drop the bomb on Japan remains controversial, and historians continue to dispute the bomb’s role in ending the Pacific war. The traditional view argues that Truman faced a hellish choice: use the bomb or subject U.S. soldiers to a costly land invasion. Officials at the time did not believe that Japan was on the verge of unconditional surrender, and the planned land invasion of the home islands would have resulted in extremely high casualties on both sides. The months preceding the atomic bombings had witnessed some of the most horrific battles of the war in the Pacific, with thousands of U.S. troops dying in island assaults. Historians Thomas B. Allen and Norman Polmar write:

Had the invasions occurred, they would have been the most savage battles of the war. Thousands of young U.S. military men and perhaps millions of Japanese soldiers and civilians would have died. Terror weapons could have scarred the land and made the end of the war an Armageddon even worse than the devastation caused by two atomic bombs.

Immediately after the bombing of Hiroshima and Nagasaki, there was significant moral backlash, expressed most poignantly in the writings of John Hersey, whose gripping story of six Hiroshima residents on the day of the bombing shocked readers of the New Yorker in 1946. But the debate was not over whether the bombing was truly necessary to end the war. It was not until the mid-1960s that an alternate interpretation sparked a historiographical dispute. In 1965, Gar Alperovitz argued in his book Atomic Diplomacy that the bomb was dropped primarily for political rather than military reasons. In the summer of 1945, he says, Japan was on the verge of surrender. Truman and his senior advisors knew this but used the atomic bomb to intimidate the Soviet Union and thus gain advantage in the postwar situation. Some proponents of this perspective have disagreed with Alperovitz on the primacy of the Soviet factor in A-bomb decision making, but have supported his conclusion that the bomb was seen by policy makers as a weapon with diplomatic leverage.

A middle-ground historical interpretation, convincingly argued by Barton Bernstein, suggests that ending the Pacific war was indeed Truman’s primary reason for dropping the bomb, but that policy makers saw the potential to impress the Soviets, and to end the war before Moscow could join an allied invasion, as a “bonus.” This view is buttressed by compelling evidence that most senior officials did not see a big difference between killing civilians with fire bombs and killing them with atomic bombs. The war had brutalized everyone. The strategy of intentionally attacking civilian targets, considered beyond the pale at the beginning of the war, had become commonplace in both the European and Asian theaters. Hiroshima and Nagasaki, in this context, were the continuation of decisions reached years earlier. It was only after the bombings that the public and the political leaders began to comprehend the great danger the Manhattan Project had unleashed and began to draw a distinction between conventional weapons and nuclear weapons.